What Kind of AI Teammates Do We Want? Four Insights from Creative Fiction

The story of Klara and the Sun and what it tells us about our human-AI teams

Mr Capaldi believed there was nothing special inside Josie that couldn’t be continued. He told the Mother he’d searched and searched and found nothing like that. But I believe now he was searching in the wrong place. There was something very special, but it wasn’t inside Josie. It was inside those who loved her.

― Klara, Klara and the Sun.

Welcome to a new issue of Culturescapes!

As LLMs transform our work tools into workplace teammates, a question arises naturally: what kind of teammate do we want our AI teammates to be? It may seem like an elusive question to ask at such an early stage of human-AI teaming, but that future is not as far off as you might think. Let’s put this question into a few scenarios. You are on a team of five, and one of your teammates is an artificial teammate who drinks from the firehose of your team’s data, facilitating every possible interaction, providing meeting notes, making Slack jokes, and supporting the team’s decision-making with incredible insights that you weren’t even aware of. One day, you realize you made a mistake and don’t want your boss to learn about it. Will your artificial teammate cover for your mistake so it doesn’t escalate into something serious? Or will it inform on you? You have been sick the entire week but are out of sick days and falling behind on your tasks. Will your AI teammate empathize with you, or will it add more to your plate? Your team achieved a milestone, and the success is due to you and your AI teammate. How would the two of you split the recognition?

Luckily, we have a fantastic space to look at to get some hints at the answers to these questions: the world of fiction. Fiction offers a rich landscape for exploring human-AI dynamics, blending possibilities with emotional and ethical depth. Various movies and novels speculate on the relationship between humans and artificial companions. I will tap into the novel genre and share some notes on this question.

Recently, I read Kazuo Ishiguro’s Klara and the Sun, which tells the story of a little girl, Josie, and her artificial friend (AF), Klara. The story begins with Klara in the robot store, waiting to be purchased by someone. We see the world through her observant eyes as she notices subtle cues and gestures the way a small child would. She has a special relationship with the sun, which will reveal itself later in the story. The day finally arrives, and she gets picked up by Josie and her mom. The story then revolves around Klara building a relationship with Josie and her mom. Later, we learn that Josie struggles with health issues. That’s why her mom decided to buy Klara as a companion for Josie. I will not spoil the rest of the story for people who want to read it themselves. However, I found the author's gradual building of a human-AI relationship inspiring.

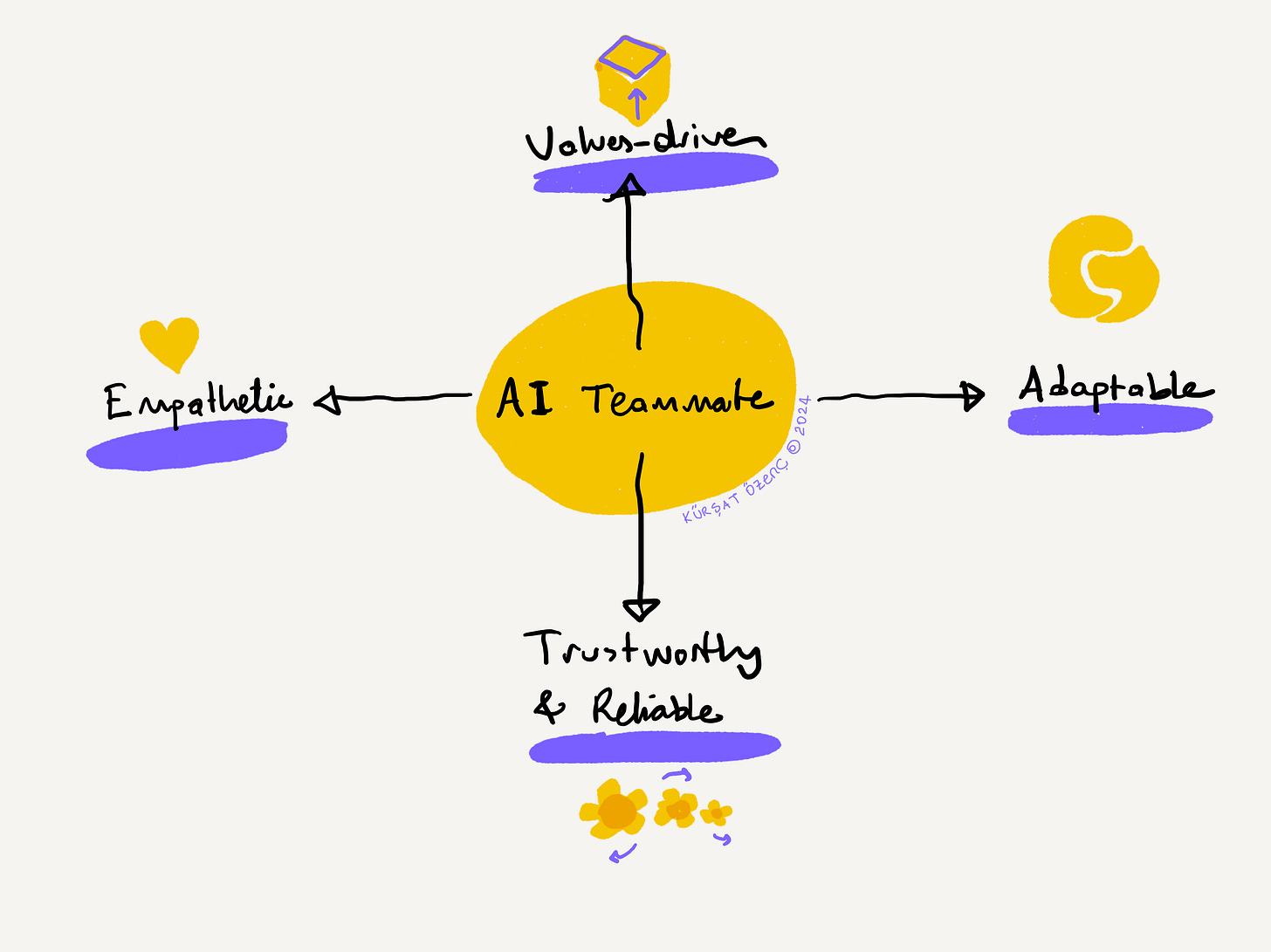

I will share four moments to give us insights into why we need AI teammates who are trustworthy, empathetic, adaptable, and values-driven.

AI-Teammate with Empathy

Klara is in the store, waiting to be picked up by a human. She spends hours in the store observing customers and their interactions with the AFs on display. She notices subtle details, such as how people express emotions through body language and tone of voice. From the storefront, she watches a beggar and his dog in the distance. One day, she observes that both of them are still, not moving, and thinks that they are both dead. She panics and wants to tell others about it. The next day, to her amazement, the beggar and the dog are cheerfully enjoying their morning. She is relieved and attributes their return to life to the sun. Her acute observational skill becomes crucial when Klara later integrates into Josie’s household, as she learns to read and interpret people’s emotions.

The first insight from this scene is the importance of empathy and empathy-driven behaviors. The scene underscores Klara's role as a reflective mirror, interpreting and responding to human behaviors with empathy. It highlights how effective AI teammates must be designed to observe and adapt to the nuances of human interaction.

AI-Teammate with Trustworthiness

In the beginning, everyone in the family views Klara with skepticism. Over time, they grow to trust Klara through shared experiences, such as Klara accompanying Josie during lonely moments or offering emotional support during challenging times. Klara’s consistent presence and competence solidify this trust. She even earns the trust of the highly skeptical housekeeper, Melania, with her unwavering support for Josie.

This gradual building of trust mirrors real-world dynamics in human-AI collaboration. It emphasizes the importance of designing AI interactions that demonstrate reliability and competence over time, fostering deeper human-AI relationships.

AI-Teammate with Values

When Josie’s mother suggests that Klara might "replace" Josie if her health deteriorates, Klara’s loyalty to Josie drives her to reject the idea. Instead, Klara seeks unconventional solutions to save Josie, like appealing to the Sun for healing. In this instance, as readers, we root for Klara to push the boundaries to help sick Josie. However, we could also feel uneasy about her loyalty in a less charitable scenario.

This moment raises critical ethical questions about autonomy, the boundaries of AI actions, and the potential consequences of programming AI with unwavering loyalty. It illustrates the need for values: ethical frameworks that allow AI to balance loyalty with broader moral considerations.

AI-Teammate with an Adaptive Mindset

Klara sometimes misinterprets human social cues and motives, such as her confusion over Josie’s mother’s request to "become Josie." Klara’s literal interpretation of the situation reveals her limitations in understanding the emotional complexity behind the request.

This highlights the risks of misalignment in human-AI goals and perceptions. Designing AI systems that can better interpret and adapt to human emotions and intentions is crucial to prevent such disconnects in real-world situations.

Fiction like Klara and the Sun invites us to imagine plausible futures that can inform the practical design of our AI teammates. We can summarize our learnings as follows:

Build AI systems that prioritize empathy and adaptability.

Design mechanisms for trust-building over time.

Incorporate ethical decision-making tools that align with human values.

Address misalignments proactively through transparent interaction models.

I am curious about what other fiction is out there to inspire us in crafting human-AI teams. What novels, movies, or TV series come to mind, and what do they mean to you? Please share them in the comments below.

Also, a big thanks to everyone who responded to the poll I conducted in the last issue. You chose organizational culture/rituals and human-AI interactions as the highest-ranking topics. These will help me prioritize my writing in the new year.

What I am reading, listening to

This NYT Magazine article, "Don’t Say ‘Macbeth’ and Other Strange Rituals of the Theater World," is a great read for a peek backstage in the theater world. The authors mention so many rituals and artifacts that their rich work can easily be called a small but mighty ethnography project.

And that’s a wrap for this issue. Until next time, take good care of yourself and your loved ones!